This showcases how Open Voice OS (OVOS) runs on the Mark II device and how it connects to a local AI, powered by llama.cpp with the Mistral 7B OpenOrca model, to answer basic questions!

Wow, great answers and response times!

Just curious, what TTS engine are you using?

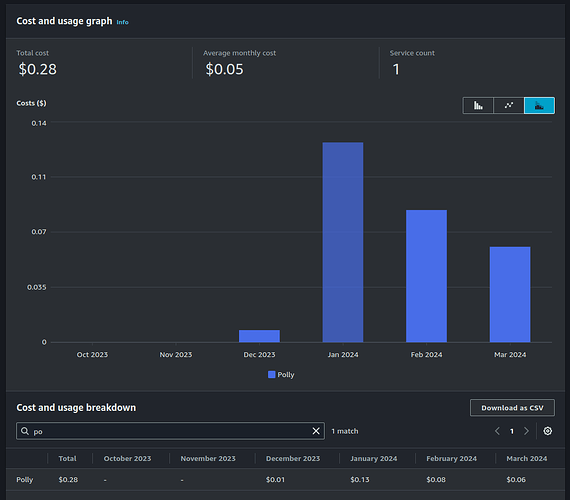

I’m using Amazon Polly with the Danielle voice and the neural engine. It’s part of the PR I made yesterday.

I don’t have an AWS access key. Is there any way to get one without providing a credit card number?

Just adding a small detail about the local AI used in this demo. The persona defined was:

I am a helping assistant who gives very short and factual answers in maximum 40 words.
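For anyone wondering where a persona like this goes: in OpenAI-style chat APIs it is usually sent as the first `system` message, ahead of the user's question. The sketch below assumes the local setup accepts that message format. The model name `mistral-7b-openorca` and the helper `build_chat_payload` are hypothetical placeholders, not taken from the demo.

```python
# Hypothetical sketch: packaging the persona as the "system" message of an
# OpenAI-style chat completion request. Names here are illustrative only.
import json

PERSONA = (
    "I am a helping assistant who gives very short and factual answers "
    "in maximum 40 words."
)


def build_chat_payload(question: str, persona: str = PERSONA,
                       model: str = "mistral-7b-openorca") -> dict:
    """Return an OpenAI-style chat request body (model name is a placeholder)."""
    return {
        "model": model,
        "messages": [
            # The system message steers the style and length of every answer.
            {"role": "system", "content": persona},
            # The user message carries the transcribed spoken question.
            {"role": "user", "content": question},
        ],
    }


if __name__ == "__main__":
    print(json.dumps(build_chat_payload("What is the capital of France?"), indent=2))
```

The 40-word limit is only a request made to the model through the prompt. Nothing in this payload enforces it.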

@goldyfruit do you have any more info on this? I would love to set this up at home. My question is about the local AI used in the demo: is it a separate server, and if so, what is it running on? I have a bunch of miscellaneous Raspberry Pis, ODROIDs and older PCs, hence the question. It would be fun to see the Mark II reacting much faster to questions. Thanks!

@Travis_Andrew The local AI is running on a dedicated Kubernetes cluster with an old Nvidia Tesla P40 GPU.

The local AI is handled by llama.cpp and the model used at the time was Mistral 7B.

To have the Mark II (OVOS) interact with the local AI, I’m using the OpenVoiceOS/skill-ovos-fallback-chatgpt skill on GitHub.
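To picture the round trip between the skill and the local AI, here is a minimal stdlib-only sketch. It assumes the llama.cpp server exposes an OpenAI-compatible `/v1/chat/completions` endpoint. Judging by its name, the fallback skill speaks a ChatGPT-style API, but this is not the skill's actual code. The host, port (`localhost:8080`) and the helper names are all hypothetical.

```python
# Hypothetical sketch: POST an OpenAI-style chat request to a local llama.cpp
# server and extract the answer. URL and helper names are assumptions.
import json
import urllib.request

LOCAL_URL = "http://localhost:8080/v1/chat/completions"  # assumed host/port


def build_request(question: str, persona: str,
                  url: str = LOCAL_URL) -> urllib.request.Request:
    """Build a JSON POST request with the persona as the system message."""
    payload = {
        "messages": [
            {"role": "system", "content": persona},
            {"role": "user", "content": question},
        ],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_answer(response: dict) -> str:
    """Return the assistant text from an OpenAI-style chat completion response."""
    return response["choices"][0]["message"]["content"].strip()


def ask_local_llm(question: str, persona: str, url: str = LOCAL_URL,
                  timeout: float = 30.0) -> str:
    """Send the question to the local server and return the answer text."""
    request = build_request(question, persona, url)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return extract_answer(json.load(resp))
```

On a machine where such a server is reachable, a call would look like `ask_local_llm("Who wrote Hamlet?", persona)`. The OVOS skill would then hand the returned text to the TTS engine to be spoken.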

I hope it helps.