As the Mark 1 gets older and older, running a voice assistant on it can be a challenge!

This is why HiveMind was born: it solves this challenge by offloading the compute to a more powerful external device.

This video demonstrates how Open Voice OS performs on a Mark II (Raspberry Pi 4) against HiveMind Satellite on a Mark 1 (Raspberry Pi 3B).

The HiveMind Core and Open Voice OS parts are running in a Kubernetes cluster on a Dell R720 server (plenty of compute power).

Quick overview of the running pods:

$ kubectl get pod -n ovos -o wide -w
NAME                                  READY   STATUS    RESTARTS   AGE     IP             NODE                    NOMINATED NODE   READINESS GATES
hivemind-extras-7c575f7b58-nqg85      1/1     Running   0          34h     10.233.65.26   k8s-worker-3.home.lan   <none>           <none>
hivemind-listener-5dc564f875-fd2qz    1/1     Running   0          34h     10.233.65.36   k8s-worker-3.home.lan   <none>           <none>
ovos-core-56575684dd-zkjqg            2/2     Running   0          34h     10.233.65.32   k8s-worker-3.home.lan   <none>           <none>
ovos-skills-64d84dc974-xzcn9          7/7     Running   0          6h47m   10.233.65.1    k8s-worker-3.home.lan   <none>           <none>
ovos-skills-extras-86f44f55c8-g8mm6   3/3     Running   0          33h     10.233.65.13   k8s-worker-3.home.lan   <none>           <none>

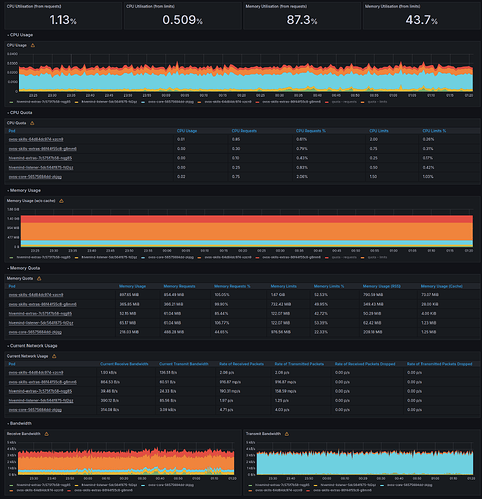

Here is a quick resource consumption graph for the HiveMind and Open Voice OS components running on the Kubernetes cluster.

Feel free to ask for more details.

Very cool!

AFAIK this is the first Mark 1 HiveMind satellite. I’ve wanted to do it for a while but never got to it. It really brings new life to the device.

I want to improve the PHAL plugin even more.

Great job as usual!

Is this still being updated and worked on? I loved the Mark 1. I am willing to keep the 3B installed in the Mycroft Mark 1 enclosure and offload what is required to, say, a Pi 4, as my understanding is that HiveMind can run in a master/slave kind of setup, right? I’d like to be pointed to more docs and setup instructions so I can try this out.

Thanks!

Yes it is. Check this thread: [HOWTO] Begin your Open Voice OS journey with the ovos-installer 💖 😍

When using the ovos-installer you can select the server profile on the host that you want to be the HiveMind master node. Once the master is installed, run the ovos-installer on the Mark 1 and choose the satellite profile.

@goldyfruit

Do you mind sharing your K8s deployment/statefulset/replica set files? I’ve moved everything in my environment to 3 k8s nodes (home assistant, plex, overseer, sonarr, radarr, etc.) and I want to deploy ovos/hivemind there as well. But, the documentation for the docker container is a little confusing.

The Docker containers documentation is only written for Docker or Podman, not for Kubernetes, and I don’t intend to extend it.

Here are the HiveMind manifests. Don’t copy/paste them as-is, because you will have to replace a few things (image, storage class, etc.).

apiVersion: v1
kind: Service
metadata:
  name: hivemind-listener
  namespace: ovos
spec:
  ports:
    - port: 5678
      protocol: TCP
      targetPort: 5678
  selector:
    app: hivemind-listener
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: hivemind-share-claim
  namespace: ovos
spec:
  storageClassName: nfs-csi
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 256Mi
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: hivemind-listener
  name: hivemind-listener
  namespace: ovos
spec:
  replicas: 3
  revisionHistoryLimit: 3
  selector:
    matchLabels:
      app: hivemind-listener
  template:
    metadata:
      labels:
        app: hivemind-listener
    spec:
      affinity:
        podAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            - labelSelector:
                matchExpressions:
                  - key: app
                    operator: In
                    values:
                      - ovos-core
                      - ovos-skills
                      - hivemind-extras
                      - hivemind-listener
              topologyKey: kubernetes.io/hostname
              namespaceSelector: {}
      containers:
        - name: hivemind-listener
          image: registry.smartgic.io/hivemind/hivemind-listener:alpha
          imagePullPolicy: Always
          command:
            [
              "hivemind-core",
              "listen"
            ]
          ports:
            - containerPort: 5678
              protocol: TCP
          volumeMounts:
            - name: hivemind-share
              mountPath: /home/hivemind/.local/share/hivemind-core
            - name: hivemind-listener-cm
              mountPath: /home/hivemind/.config/hivemind-core/server.json
              subPath: server.json
          resources:
            requests:
              cpu: "500m"
              memory: "64M"
            limits:
              cpu: "750m"
              memory: "128M"
          readinessProbe:
            tcpSocket:
              port: 5678
            initialDelaySeconds: 10
            periodSeconds: 5
          livenessProbe:
            tcpSocket:
              port: 5678
            failureThreshold: 5
            periodSeconds: 5
      volumes:
        - name: hivemind-listener-cm
          configMap:
            name: hivemind-listener-cm
        - name: hivemind-share
          persistentVolumeClaim:
            claimName: hivemind-share-claim
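Note that the Deployment mounts a ConfigMap named hivemind-listener-cm that is not included in the manifests above. A minimal sketch of what it could look like follows. The empty JSON object is only a placeholder and an assumption on my side: the real server.json content is not shown here, so take it from the HiveMind documentation for your version.

```yaml
# Hypothetical sketch: the actual server.json content is NOT part of this thread.
apiVersion: v1
kind: ConfigMap
metadata:
  # Must match configMap.name in the Deployment's "volumes" section.
  name: hivemind-listener-cm
  namespace: ovos
data:
  # The key has to be "server.json" because the Deployment mounts it
  # with subPath: server.json.
  server.json: |
    {}
```

Because the file is mounted with subPath, Kubernetes does not refresh it in running pods when the ConfigMap changes, so the pods most likely need a restart (for example a rollout restart of the Deployment) after any edit.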

Thank you so much! I appreciate it!

Of course I wouldn’t copy and paste; I wanted to make sure I wasn’t missing anything on the volume mounts and ports.

Do you have the ones for your ovos pods as well? It’s mostly the environment values/variables that I want to verify I’m getting right.

Once I get it working, I can write up a quick Kubernetes how-to in a GitHub repo that you can link to somewhere, if you want.
