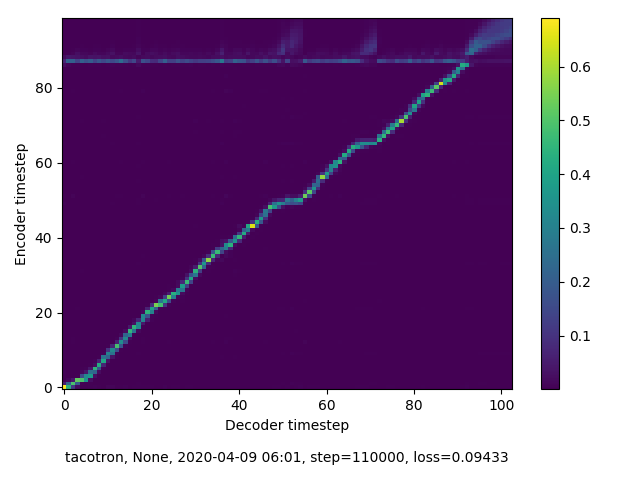

I’m currently training my own German voice, based on mimic2, to be free to use by the community.

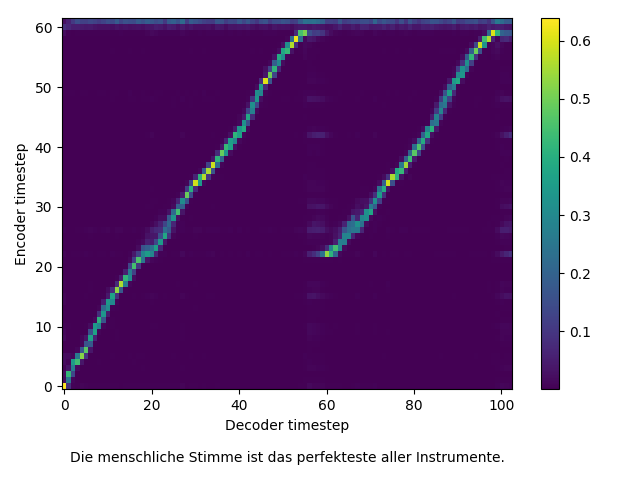

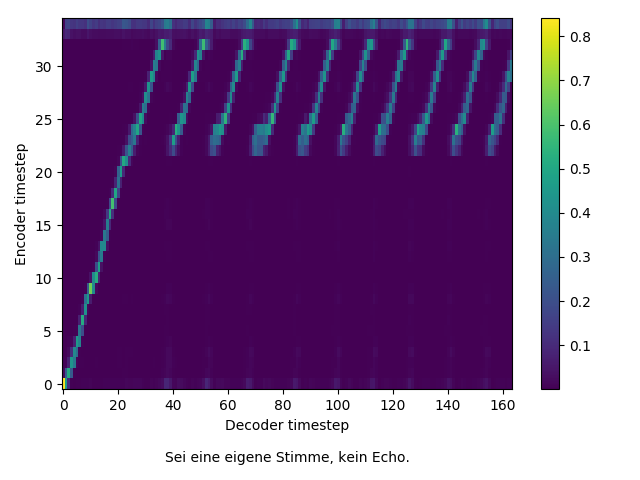

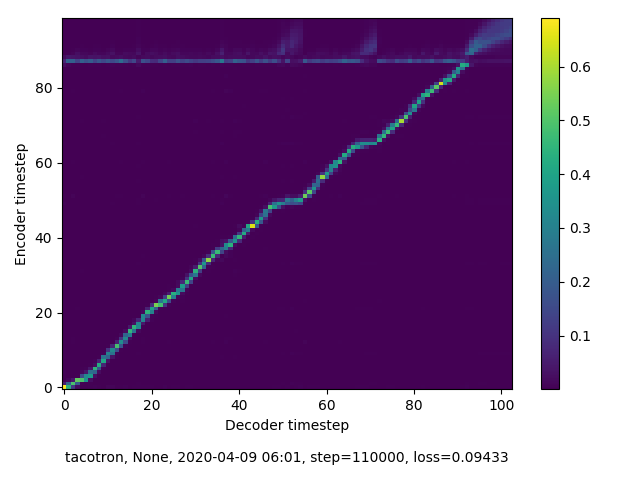

But the alignment graph always shows a high horizontal line, and the model produces speech that sounds too robotic.

Will this line go away with more training steps (currently at 110k), or how can I fix it?
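To put a number on the horizontal line instead of judging it by eye, one rough check is to follow the strongest attention weight at each decoder step. This is a minimal sketch, not something mimic2 provides: it assumes the alignment matrix is indexed as `alignment[encoder_step][decoder_step]` (decoder on the x-axis, encoder on the y-axis, encoder step 0 at the bottom), so a high horizontal line would mean many decoder steps keep attending to the last encoder positions. The function names and the `tail` parameter are hypothetical.

```python
from typing import List, Sequence

Matrix = Sequence[Sequence[float]]  # assumed layout: alignment[encoder_step][decoder_step]


def argmax_path(alignment: Matrix) -> List[int]:
    """For each decoder step (column), return the encoder index with the highest weight."""
    n_enc = len(alignment)
    n_dec = len(alignment[0])
    path = []
    for d in range(n_dec):
        column = [alignment[e][d] for e in range(n_enc)]
        # Ties resolve to the lowest encoder index.
        path.append(max(range(n_enc), key=column.__getitem__))
    return path


def stuck_at_end_ratio(alignment: Matrix, tail: int = 1) -> float:
    """Fraction of decoder steps whose focus sits in the last `tail` encoder positions.

    A long horizontal line at the top of the plot shows up as a high ratio.
    """
    n_enc = len(alignment)
    path = argmax_path(alignment)
    return sum(1 for e in path if e >= n_enc - tail) / len(path)


def monotonic_ratio(alignment: Matrix) -> float:
    """Fraction of consecutive decoder steps whose focus does not move backwards."""
    path = argmax_path(alignment)
    if len(path) < 2:
        return 1.0
    forward = sum(1 for a, b in zip(path, path[1:]) if b >= a)
    return forward / (len(path) - 1)
```

On a clean diagonal alignment the stuck-at-end ratio stays small, because only the last few frames attend to the final characters; a long line at the top pushes it up. Computing it for the same sentence at different checkpoints (say 110k vs. 127k) would show whether the line is actually shrinking.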

The generated TTS WAV file is available here:

https://drive.google.com/open?id=1A6cMpOxRtopXRwLpFeBCr31cBUxWr_gF

The alignment example in the MycroftAI/mimic2 GitHub repository (a text-to-speech engine based on the Tacotron architecture, initially implemented by Keith Ito) does not have this problem.

I would be thankful for any suggestions on how to fix this.

Thorsten

I was never able to get this to go away with different datasets, including LJ.

Thanks for your reply.

Do you know whether this line is the reason the voice sounds robotic, with a little reverb?

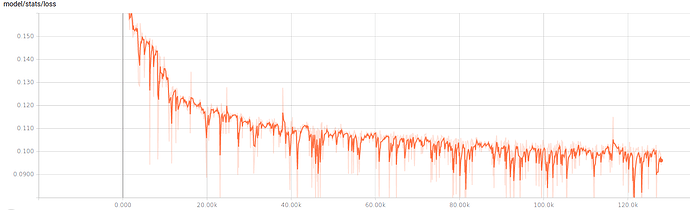

How many steps did you train for during your tests? I’m currently at step 127k.

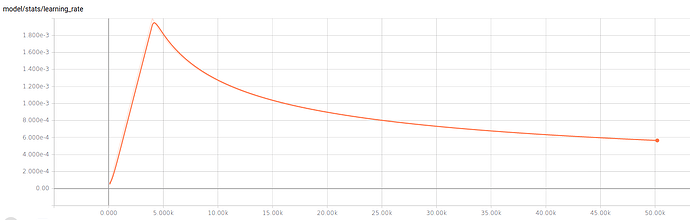

Up to 400k at one point. It never got better. Basically, 50k is the cut-off: if alignment hasn’t happened by then, I’d kill the run and try something else. Also, the large spikes were places where I’d stop, back up to an earlier checkpoint, and start again. That seemed to help a bit.
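The rule of thumb above (give up at around 50k steps if there is still no alignment, and restart from a backup when the loss spikes) can be written down as a small watchdog. This is a hypothetical sketch, not a mimic2 feature: the class name, the 2x spike factor and the 100-step averaging window are assumptions, and `aligned` has to come from however you judge alignment (for example, by looking at the plot).

```python
from collections import deque

ALIGNMENT_DEADLINE = 50_000  # steps; the cut-off mentioned in the post
SPIKE_FACTOR = 2.0           # assumption: loss > 2x the recent average counts as a spike
WINDOW = 100                 # assumption: number of recent losses to average


class TrainingWatchdog:
    """Hypothetical helper that decides what to do after each logged training step."""

    def __init__(self, deadline: int = ALIGNMENT_DEADLINE,
                 spike_factor: float = SPIKE_FACTOR, window: int = WINDOW):
        self.deadline = deadline
        self.spike_factor = spike_factor
        self.recent = deque(maxlen=window)  # sliding window of recent losses

    def check(self, step: int, loss: float, aligned: bool) -> str:
        """Return 'continue', 'rollback' (restore an earlier checkpoint) or 'abort'."""
        # No alignment by the deadline: kill the run and try something else.
        if step >= self.deadline and not aligned:
            return "abort"
        # Only judge spikes once the window is full, so early noise is ignored.
        if len(self.recent) == self.recent.maxlen:
            average = sum(self.recent) / len(self.recent)
            if loss > self.spike_factor * average:
                # The spike is not added to the history; training resumes from a backup.
                return "rollback"
        self.recent.append(loss)
        return "continue"
```

Keeping the spike out of the history means the average still reflects the run before the spike when training resumes from the restored checkpoint.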

Thanks for your reply.

400k training steps sounds like a lot of invested compute time.

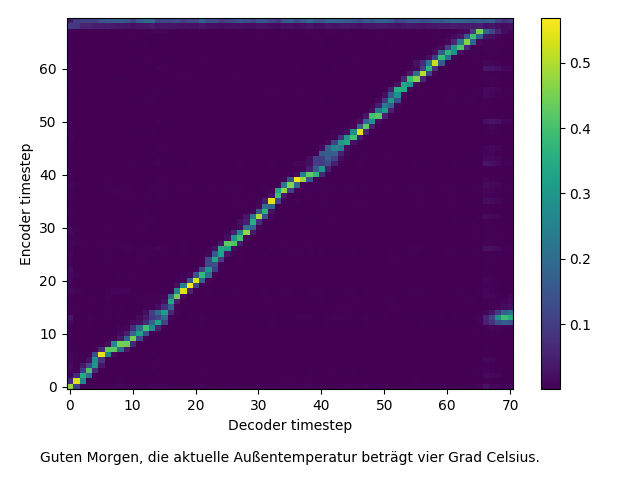

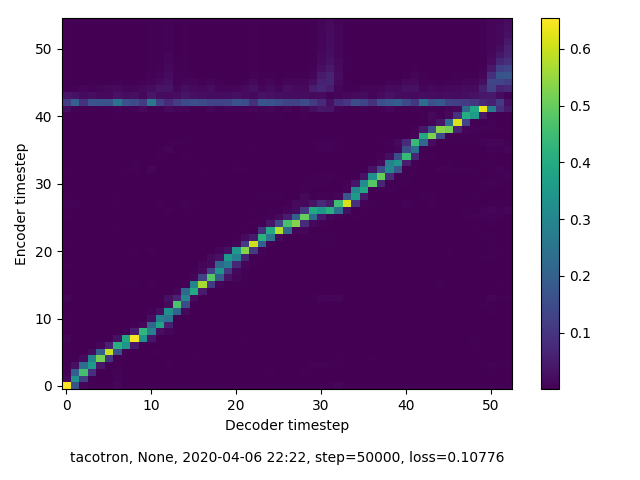

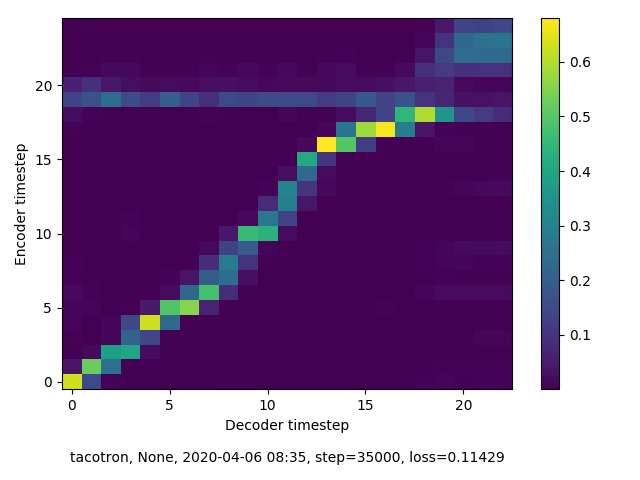

I’m currently running a training with the original Tacotron 1 implementation (GitHub - keithito/tacotron: A TensorFlow implementation of Google's Tacotron speech synthesis with pre-trained model (unofficial)) and TensorFlow 1.6, instead of the mimic2 implementation with TensorFlow 1.8, on the same LJSpeech dataset.

The results and alignments seem (much) better.

It could be interesting to check whether the different results come from a code change in mimic2 or from using TensorFlow 1.6 instead of 1.8.

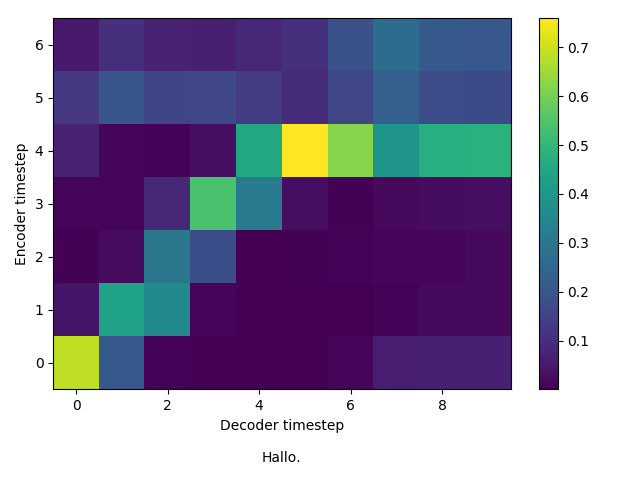

Here are some sample outputs from the mimic2 vs. Tacotron 1 training runs.

Sample WAVs

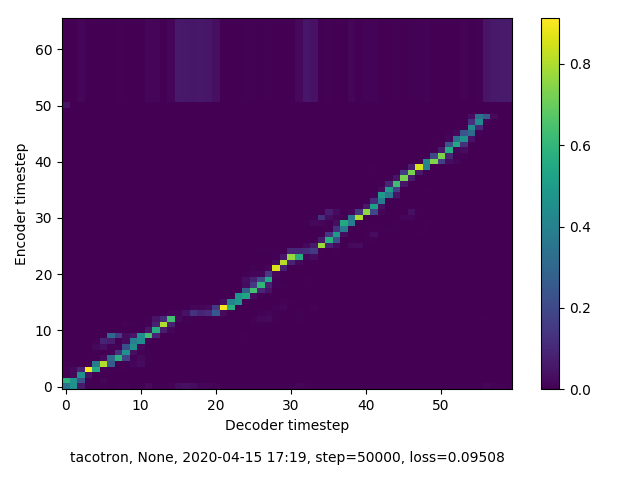

Mimic2 alignment graphs

Alignment at 35k and 50k steps

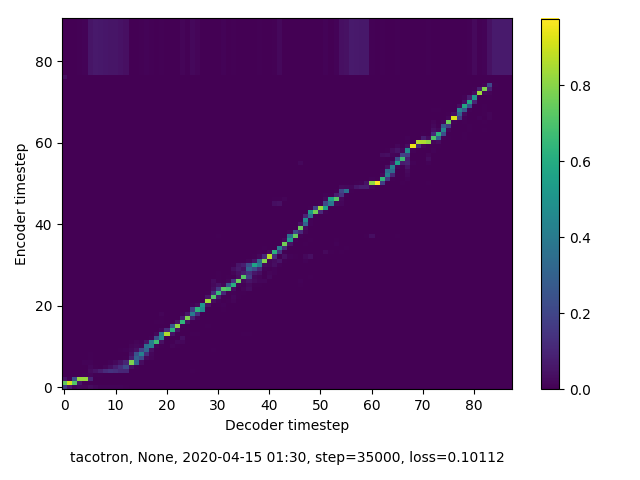

Tacotron version 1 (keithito) graphs

Alignment at 35k and 50k steps

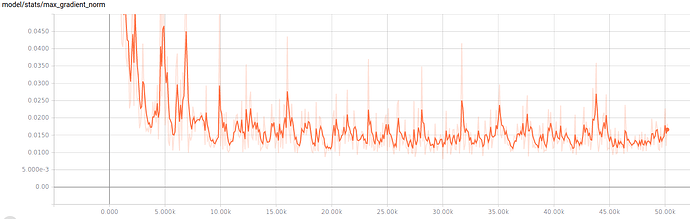

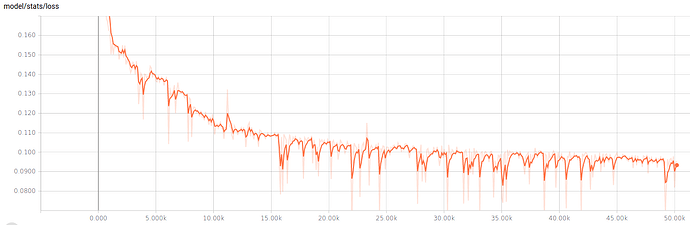

TensorBoard (at 50k steps)


This replicates what I experienced as well.

So you tried Tacotron 1 too, instead of the mimic2 fork?

Yes. This was how I built the first model I used for my local TTS, and it sounded pretty good.

Was that your 400k run, or how many steps did you train your model for before it was good enough for everyday use?

I did 400k a couple of times on each. The model I ended up using was at about 350k steps on Tacotron (1). None of the current mimic2 models ever worked well for me; there’s an earlier commit, from before the attention changes were added, that worked more like Tacotron.

I’ve been waffling about trying a Mozilla TTS Tacotron 2 training run with another dataset, but haven’t found the time to get it set up yet.