Mimic 3: Mycroft’s newer, better, privacy-focused neural text-to-speech (TTS) engine. In human terms, that means it can run completely offline and sounds great. To top it all off, it’s open source.

Mimic 3 can:

- Speak more than two dozen languages, with over 100 English voices available

- Run completely offline on devices like the Raspberry Pi 4

- Control speaking rate and variability

- Use SSML (Speech Synthesis Markup Language) to switch voices, add pauses, and pronounce words phonetically within a single document

- And more…

Mimic 3 is for anyone who wants:

- To integrate a state-of-the-art text-to-speech system into just about anything;

- A personal, offline text-to-speech system that sounds better than ever;

- The quality of a premium text-to-speech cloud service without giving up the privacy of their data;

- To contribute their language expertise (or voice) for everyone’s benefit; or

- To hack on open source code.

Using Mimic 3

Obligatory self-plug:

The easiest way to make use of Mimic 3 is to grab yourself a Mycroft Mark II, which will use Mimic 3 as its standard TTS engine. Pre-orders for the first two production runs of the Mark II have sold out. Get in quick for the third production run to have your Mark II shipped in November 2022.

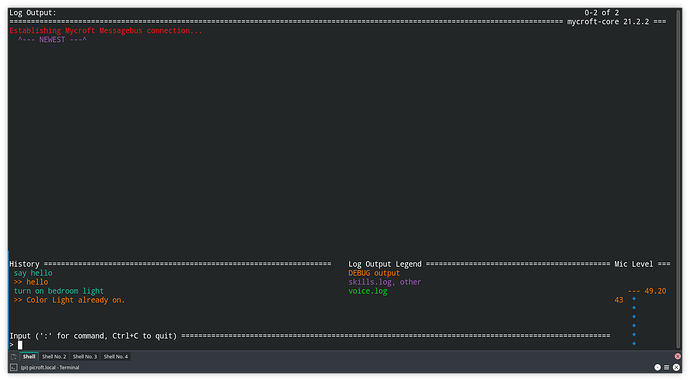

Mimic 3 can also be used on any existing Mycroft installation with a Raspberry Pi 4 or better using our TTS plugin. It can be the voice of your Mycroft assistant, computer, or next IoT project. It is intended to run completely offline on devices like the Raspberry Pi 4, and works out of the box with other open source software like Home Assistant and Node-RED. Mimic 3 includes a web API as well as a command-line interface, so it is easy to use in scripts and automations.
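To give a feel for how the web API might slot into a script, here is a minimal Python sketch. It assumes a Mimic 3 web server is already running on the same machine. The port (`59125`), the `/api/tts` path, and the `text` and `voice` query parameters are assumptions here, so check them against the documentation before relying on them. The helper names `build_tts_url` and `synthesize` are made up for this example.

```python
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumption: a local Mimic 3 web server is listening at this address.
# The port and endpoint path should be confirmed in the documentation.
BASE_URL = "http://localhost:59125/api/tts"


def build_tts_url(text, voice=None, base_url=BASE_URL):
    """Build a request URL for the (assumed) text-to-speech endpoint."""
    params = {"text": text}
    if voice:
        # Assumption: the voice is selected with a "voice" query parameter.
        params["voice"] = voice
    return f"{base_url}?{urlencode(params)}"


def synthesize(text, out_path, voice=None):
    """Request audio for `text` and save the response body to `out_path`."""
    with urlopen(build_tts_url(text, voice)) as response:
        audio = response.read()
    with open(out_path, "wb") as audio_file:
        audio_file.write(audio)
    return out_path
```

With a server running, `synthesize("Hello world", "hello.wav")` would write the returned audio to `hello.wav`. Keeping the URL building separate from the network call makes the script easy to test without a server.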

Mycroft AI has pre-trained voices for Mimic 3 in 25 different languages, with over 100 individual English speakers available. Most voices are based on publicly available datasets from worldwide volunteers, such as the indefatigable Thorsten Müller. We are always on the lookout for datasets from new languages, or higher-quality datasets for a currently supported language. All it takes is one voice to make a difference for everyone!

We have also recorded a number of new datasets in a more controlled environment with phonetically diverse data, increasing the voice quality significantly. Premium voices trained from these Mycroft datasets will be available on the Mark II, for Mycroft Members, and under commercial licensing agreements.

In addition to basic voice controls like speaking rate and variability, Mimic 3 supports a subset of Speech Synthesis Markup Language (SSML), allowing you to script who’s speaking and how. With SSML, you can create a single document that switches voices (and even languages), includes timed pauses between sentences, and manually adjusts volume, speed, etc. See the docs for details on exactly what SSML tags are currently supported.
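As a rough illustration of what such a document could look like, the Python sketch below builds a small SSML string that switches voices and inserts a timed pause. It uses standard SSML elements (`speak`, `voice`, `s`, `break`). Whether each one is in Mimic 3's supported subset is an assumption to verify in the docs, and the voice names are placeholders rather than real Mimic 3 voices.

```python
import xml.etree.ElementTree as ET


def build_ssml(segments):
    """Build an SSML document from (voice_name, sentence, pause_after) tuples.

    `pause_after` is an SSML time string such as "500ms", or None for no pause.
    """
    speak = ET.Element("speak")
    for voice_name, sentence, pause_after in segments:
        # Each sentence is wrapped in its own <voice> element.
        voice = ET.SubElement(speak, "voice", name=voice_name)
        s = ET.SubElement(voice, "s")
        s.text = sentence
        if pause_after:
            # A timed pause between this sentence and the next one.
            ET.SubElement(speak, "break", time=pause_after)
    return ET.tostring(speak, encoding="unicode")


# Placeholder voice names, for illustration only.
document = build_ssml([
    ("example_english_voice", "Hello, this is the first speaker.", "500ms"),
    ("example_german_voice", "Und hier spricht die zweite Stimme.", None),
])
print(document)
```

Generating the markup with an XML library rather than string concatenation keeps the document well-formed and escapes characters like `&` and `<` in the spoken text.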

See our documentation for all the options.

How Does It Work?

Mimic 3 uses a cloud-quality machine learning model that has been scaled down to run on lower-end devices like the Raspberry Pi 4. Specifically, Mimic 3’s voices are phoneme- or character-based PyTorch VITS models (based on the excellent work of Jaehyeon Kim) that are exported to the ONNX format and run with ONNX Runtime. At runtime, Mimic 3 transforms your text into numbers that are fed to a voice model, which produces audio resembling the dataset it was trained on.
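The "text into numbers" step can be pictured with a toy Python sketch for a character-based voice. This is not Mimic 3's actual code: the symbol table, the reserved padding ID, and the function names are all assumptions made for illustration, and a real voice ships with its own symbol inventory.

```python
def build_symbol_table(symbols):
    """Map each symbol to an integer ID, reserving 0 for a padding symbol."""
    # Assumption: "_" is used as padding and is not itself a spoken symbol.
    ordered = ["_"] + sorted(set(symbols) - {"_"})
    return {symbol: index for index, symbol in enumerate(ordered)}


def text_to_ids(text, table):
    """Convert text to the list of IDs a character-based model would consume.

    Characters missing from the table are skipped in this sketch.
    """
    return [table[ch] for ch in text.lower() if ch in table]


table = build_symbol_table("abcdefghijklmnopqrstuvwxyz ")
ids = text_to_ids("Hello world", table)
print(ids)
```

A phoneme-based voice would work the same way, except that the text is first converted to phonemes and the table maps phonemes rather than letters.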

For some languages like English and German, large public datasets for individual speakers are available. The base models trained from these datasets were used as a starting point for different voices with smaller datasets in the same language (a process called “fine-tuning”). Many different voices were trained quickly with this approach, often overnight, on a handmade server with a few RTX 3090s. We plan to release the training code soon so anyone with the right technical skills can train their own voice, with the ultimate goal of integrating it into Mimic Recording Studio.

How Can I Help?

There are many ways you can help out the Mimic 3 project:

- Help us spread the word about Mimic 3

- Join Mycroft Chat or the Community Forums and help other community members install and configure Mimic 3 for their own projects

- Provide feedback on existing voices, helping us prioritize which voices need to be tweaked or retrained

- Report bugs in the software or provide a pull request with a possible fix

- Contribute your language expertise and maybe your voice (drop your email in the form below)

Adding a new language or voice to Mimic 3 can vary in difficulty depending on two main factors:

- For new languages, Mimic 3 needs to know how to normalize and phonemize text. This involves turning everything in a sentence into words (“$5” = “five dollars”) and then converting those words into units of human speech (phonemes). Projects like espeak-ng, gruut, and lingua-franca provide Mimic 3 with this capability. The phonemization step can be skipped for languages where what’s written is very close to what’s spoken.

- Every voice comes from a dataset, which is just text with the corresponding spoken audio. An audiobook or someone speaking a list of prepared lines can be a dataset as long as the licensing allows for it. Importantly, each sentence (audio and text) needs to be separated out for training, so there is often manual work involved that can be difficult for non-native speakers. Lastly, the dataset needs to have a good variety of sounds from the language (“phonetically diverse”). This is usually not a problem if you have a dozen hours of audio data, but becomes critical with only one or two hours.
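To make the normalization step from the first point concrete, here is a deliberately tiny Python sketch that expands whole-dollar amounts into words, as in the “$5” example. It is not how espeak-ng, gruut, or lingua-franca work internally. The function names are invented for this example, and it only handles amounts from 0 to 99 with no cents.

```python
import re

ONES = [
    "zero", "one", "two", "three", "four", "five", "six", "seven", "eight",
    "nine", "ten", "eleven", "twelve", "thirteen", "fourteen", "fifteen",
    "sixteen", "seventeen", "eighteen", "nineteen",
]
TENS = ["", "", "twenty", "thirty", "forty", "fifty", "sixty", "seventy",
        "eighty", "ninety"]


def number_to_words(n):
    """Spell out an integer between 0 and 99."""
    if n < 20:
        return ONES[n]
    tens, ones = divmod(n, 10)
    return TENS[tens] if ones == 0 else f"{TENS[tens]}-{ONES[ones]}"


def normalize_dollars(text):
    """Replace whole-dollar amounts like "$5" with words like "five dollars"."""
    def expand(match):
        amount = int(match.group(1))
        if amount > 99:
            # Outside the range of this sketch: leave the text unchanged.
            return match.group(0)
        unit = "dollar" if amount == 1 else "dollars"
        return f"{number_to_words(amount)} {unit}"

    return re.sub(r"\$(\d+)", expand, text)


print(normalize_dollars("It costs $5."))
```

A real normalizer also has to cover dates, times, abbreviations, ordinals, and much more, and the rules differ for every language, which is why native-speaker expertise matters so much here.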